Network engineers and software developers share a common enemy, and that enemy is latency. I’m sure we’ve all heard about it before, and all too often requests for circuit quotes are accompanied by the statement, “the circuit must have X latency or less, otherwise the application won’t work.” It seems to make sense, but is latency really the determining factor for application performance? We’ll be discussing this concept over a series of articles. Bear with me, this might get technical …

Bandwidth vs Latency

First, let’s understand the difference between bandwidth and latency. All too often, I hear these terms used interchangeably, but they are not the same. Bandwidth refers to the maximum capacity of a connection, whereas latency represents how long it takes for your packet to get to its destination. Since the internet is often referred to as the information superhighway, I’ll use a highway to better explain.

Imagine a highway with two lanes connecting two cities 100 miles apart, with a speed limit of 100 mph. The maximum number of cars that can drive down the highway side by side is two; this represents the bandwidth. Because the speed limit is 100 mph, it will take those two cars exactly one hour to drive from one city to the next. That one hour represents the latency for this highway (or circuit).

Impact of Physics on Latency

You’ll notice that I added the concept of a speed limit to the highway metaphor. This is because networks also have a real-world speed limit: the speed of light. No matter the type of connection, we are all bound by physics, and until we are able to send packets faster than the speed of light, there is always going to be a minimum latency threshold that we simply cannot break. In fact, you can approximate that minimum latency with the simple understanding that light travels at approximately 186 miles per millisecond (186 miles/ms). It’s also worth noting that this is the speed of light inside a vacuum, meaning there are no other factors that could hinder the transmission of light.

Using the example above and applying a fiber circuit (which carries packets in the form of light), you would use this formula: 100 miles = 186 miles/ms × X ms. Solving for X gives 100 / 186, which tells you that it would take roughly 0.54 ms for a packet to go 100 miles at vacuum speed.

This also means a packet from New York to Los Angeles (roughly 2,451 miles) would take approximately 13 ms in a vacuum.
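The arithmetic above can be sketched in a few lines of Python. This is only a minimal illustration under the same assumptions as the text (light at vacuum speed, a perfectly straight path); the constant and function names are made up for this example.

```python
# Speed of light in a vacuum, in miles per millisecond
# (about 186,282 miles per second, rounded as in the article).
SPEED_OF_LIGHT_MILES_PER_MS = 186.0


def one_way_latency_ms(distance_miles: float) -> float:
    """Best-case one-way propagation delay over a straight-line path.

    Solves distance = speed * time for time. Hypothetical helper for
    illustration only; it ignores routing detours and device delays.
    """
    if distance_miles < 0:
        raise ValueError("distance must be non-negative")
    return distance_miles / SPEED_OF_LIGHT_MILES_PER_MS


if __name__ == "__main__":
    examples = [("100-mile highway", 100), ("New York to Los Angeles", 2451)]
    for label, miles in examples:
        print(f"{label}: {one_way_latency_ms(miles):.2f} ms one way")
```

Running it gives just over half a millisecond for the 100-mile case and about 13 ms for New York to Los Angeles, matching the figures above. Both are one-way, best-case numbers.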

Some of you telecom veterans will see the 13 ms and think, “I’ve never seen a 13 ms link between New York and Los Angeles,” and you would not be wrong. This is because there are several other factors in play:

- 2,451 miles is “as the crow flies,” or the shortest distance between New York and Los Angeles. Essentially, it’s as if you pulled a fiber cable in a straight shot across the U.S. Here’s the problem: circuits simply are not laid out that way. The circuits we use all depend on the infrastructure laid out by the carriers, and they will typically hop from one datacenter to the next across the U.S. until they reach the destination. It will never be a straight shot.

- There are other forms of latency. We’ve only been talking about network latency. There is also RAM latency, CPU latency, disk latency and more. Routers, switches and servers all have their own latency measurements, and the common thread is that each of these latencies creates some type of bottleneck that can result in a “delay.” In the computing world, all of these latencies/delays (while small, only a few milliseconds each) can add up and potentially create a noticeable increase in overall latency.
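As a rough illustration of how these two factors stack on top of the vacuum figure, the sketch below adds a longer route and per-device delays to the propagation time. Every input in the example run (the route length, the number of devices, the delay per device) is a made-up placeholder rather than a measurement, and the function name is hypothetical.

```python
SPEED_OF_LIGHT_MILES_PER_MS = 186.0  # vacuum speed, as used in the article


def estimated_one_way_latency_ms(route_miles, per_hop_delays_ms):
    """Propagation delay over the actual route plus the delay added by each device.

    route_miles: length of the path the circuit really follows (hypothetical input).
    per_hop_delays_ms: delay added by each router, switch, or server (hypothetical).
    """
    propagation_ms = route_miles / SPEED_OF_LIGHT_MILES_PER_MS
    return propagation_ms + sum(per_hop_delays_ms)


if __name__ == "__main__":
    straight_line = estimated_one_way_latency_ms(2451, [])
    # Placeholder values: a 3,000-mile route through ten devices at 0.5 ms each.
    with_detours = estimated_one_way_latency_ms(3000, [0.5] * 10)
    print(f"straight line, no devices: {straight_line:.1f} ms")
    print(f"longer route, ten devices: {with_detours:.1f} ms")
```

The point is the shape of the calculation, not the specific result: each extra mile of route and each device in the path adds to the total, so a real circuit always lands above the straight-line minimum.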

Asking the Right Questions

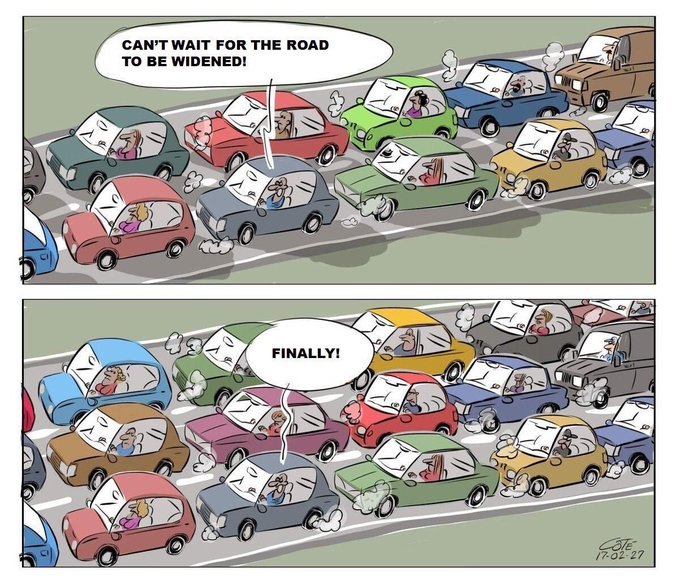

I’m diving deep into understanding the physics behind latency because it builds the framework for understanding the relationship between latency and performance. I’ve seen several cases where latency-related performance issues are diagnosed as bandwidth-related performance issues and vice versa. Often times, I have seen companies request more bandwidth in an attempt to improve performance for latency-related issues. When it happens, it makes me think of the following cartoon:

As you can see, increasing the bandwidth doesn’t necessarily improve the time it takes for a car to drive from one city to another. It merely means more cars can drive from one city to another in the same amount of time. Therefore, it’s important to ask the right questions during a discovery session to pinpoint and fully understand what the customer is trying to accomplish and why their application is not performing well. The problem isn’t always bandwidth, and it isn’t always latency. In Part 2 of this series, I’ll dive into additional factors that can affect performance.
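To put rough numbers on the bandwidth-versus-latency distinction, here is a simplified sketch that models delivery time as one-way latency plus the time needed to push the bits onto the link. It is an assumption-laden toy model (no protocol overhead, handshakes, or congestion), and the function name, payload size, and bandwidth values are hypothetical.

```python
def transfer_time_ms(payload_bytes: int, bandwidth_mbps: float, latency_ms: float) -> float:
    """Simplified delivery time: one-way latency plus time to send the bits.

    A rough model only; it ignores protocol overhead, handshakes, and congestion.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth must be positive")
    bits = payload_bytes * 8
    # Seconds to send the bits at the given rate, converted to milliseconds.
    serialization_ms = bits / (bandwidth_mbps * 1_000_000) * 1000
    return latency_ms + serialization_ms


if __name__ == "__main__":
    small_request = 1_000  # bytes; hypothetical payload size
    latency = 13.0         # ms; the New York to Los Angeles vacuum figure from the article
    for mbps in (100, 200):
        total = transfer_time_ms(small_request, mbps, latency)
        print(f"{mbps} Mbps: {total:.2f} ms")
```

With these placeholder inputs, doubling the bandwidth from 100 Mbps to 200 Mbps moves the total from 13.08 ms to 13.04 ms. The extra lanes barely matter for a small payload because nearly all of the time is the drive itself.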